Introduction

KFServing is now KServe

KFServing was renamed to KServe in September 2021, when the kubeflow/kfserving GitHub repository was transferred to the independent KServe GitHub organization.

To learn about migrating from KFServing to KServe, see the migration guide and the blog post.

What is KServe?

KServe is an open-source project that enables serverless inferencing on Kubernetes. KServe provides performant, high abstraction interfaces for common machine learning (ML) frameworks like TensorFlow, XGBoost, scikit-learn, PyTorch, and ONNX to solve production model serving use cases.

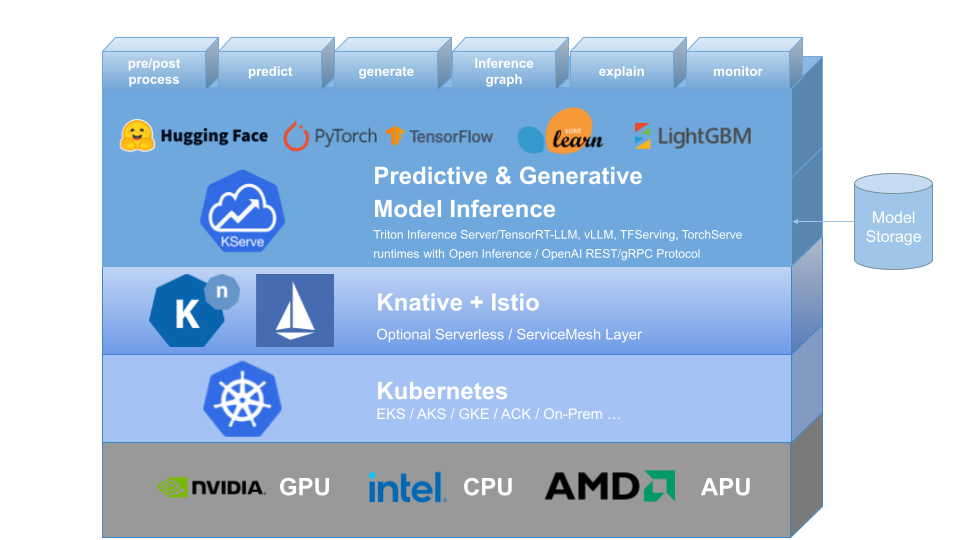

The following diagram shows the architecture of KServe:

KServe provides the following functionality:

- Provide a Kubernetes Custom Resource Definition for serving ML models on arbitrary frameworks.

- Encapsulate the complexity of autoscaling, networking, health checking, and server configuration to bring cutting edge serving features like GPU autoscaling, scale to zero, and canary rollouts to your ML deployments.

- Enable a simple, pluggable, and complete story for your production ML inference server by providing prediction, pre-processing, post-processing and explainability out of the box.

How to use KServe with Kubeflow?

Kubeflow provides Kustomize installation files in the kubeflow/manifests repo with each Kubeflow release.

However, these files may not be up-to-date with the latest KServe release.

See KServe on Kubeflow with Istio-Dex from the KServe/KServe repository for the latest installation instructions.

Kubeflow also provides the models web app to manage your deployed model endpoints with a web interface.

Feedback

Was this page helpful?

Thank you for your feedback!

We're sorry this page wasn't helpful. If you have a moment, please share your feedback so we can improve.