Katib Experiment Lifecycle

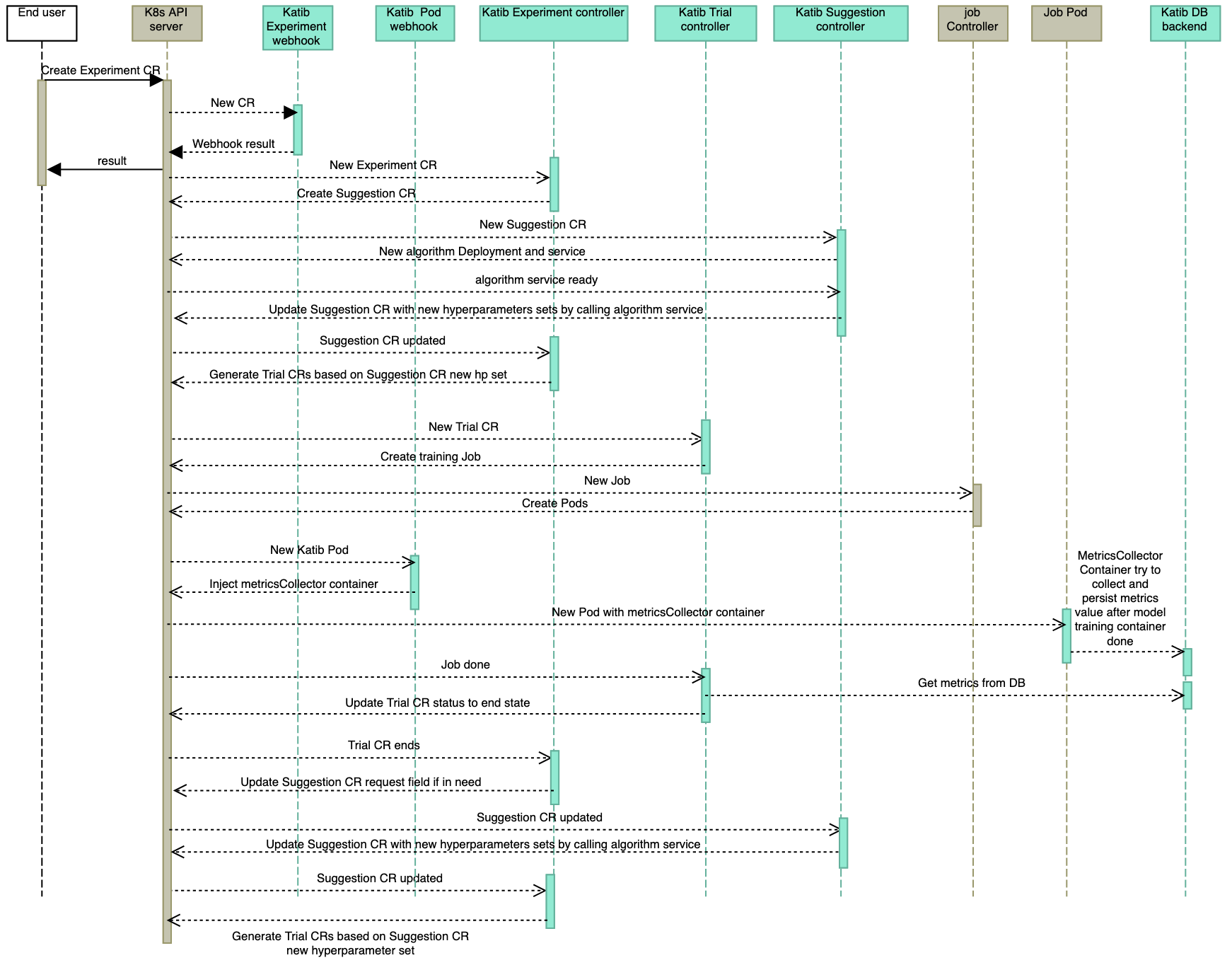

When a user creates an Experiment, the Katib Experiment controller, Suggestion controller, and Trial controller work together to tune hyperparameters for the user's machine learning model. The Experiment workflow is as follows:

1. The Experiment is submitted to the Kubernetes API server. The Katib Experiment mutating and validating webhooks are called to set default values for the Experiment and to validate the CR, respectively.

2. The Experiment controller creates the Suggestion.

3. The Suggestion controller creates the algorithm deployment and service based on the new Suggestion.

4. When the Suggestion controller verifies that the algorithm service is ready, it calls the service to generate `spec.request - len(status.suggestions)` sets of hyperparameters and appends them to `status.suggestions`.

5. The Experiment controller detects that the Suggestion has been updated and generates a Trial for each new set of hyperparameters.

6. The Trial controller generates a `Worker Job` based on the `runSpec` from the Trial with the new set of hyperparameters.

7. The related job controller (Kubernetes batch Job, Kubeflow TFJob, Tekton Pipeline, etc.) generates Kubernetes Pods.

8. The Katib Pod mutating webhook is called to inject the metrics collector sidecar container into the candidate Pods.

9. While the ML model container runs, the metrics collector container collects metrics from the injected Pod and persists them to the Katib DB backend.

10. When the ML model training ends, the Trial controller updates the status of the corresponding Trial.

11. When the Trial finishes, the Experiment controller increases the `request` field of the corresponding Suggestion if needed, and the workflow returns to step 4. If one of the end conditions is met (the Experiment exceeds `maxTrialCount` or `maxFailedTrialCount`, or reaches the `goal`), the Experiment controller finishes the Experiment.
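The request/suggestion loop between step 4 and the final step can be illustrated with a small, self-contained Python sketch. This is a toy model, not Katib's controller code: the `Suggestion` class, `run_experiment`, the `batch_size` parameter, and the `propose`/`evaluate` callables are hypothetical names introduced here, failed Trials are left out, and the exact comparisons used for the end conditions are assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Suggestion:
    # Hypothetical stand-ins for the Suggestion's spec.request and status.suggestions.
    request: int = 0
    suggestions: List[dict] = field(default_factory=list)


def sets_to_generate(s: Suggestion) -> int:
    """Step 4: spec.request - len(status.suggestions), floored at zero."""
    return max(s.request - len(s.suggestions), 0)


def fill_suggestion(s: Suggestion, propose: Callable[[int], dict]) -> List[dict]:
    """Ask a toy algorithm for the missing sets and append them to the Suggestion."""
    start = len(s.suggestions)
    new_sets = [propose(start + i) for i in range(sets_to_generate(s))]
    s.suggestions.extend(new_sets)
    return new_sets


def run_experiment(max_trial_count, batch_size, goal, propose, evaluate):
    """Loop until the goal is reached or max_trial_count Trials have run.

    Assumption: a higher metric is better, and the goal counts as reached
    once any Trial metric is >= goal.
    """
    s = Suggestion()
    metrics = []
    while len(metrics) < max_trial_count and not any(m >= goal for m in metrics):
        # Final step: raise the request, never asking for more than max_trial_count.
        s.request = min(s.request + batch_size, max_trial_count)
        # Steps 4 to 10: generate the missing sets, run one Trial per set.
        for params in fill_suggestion(s, propose):
            metrics.append(evaluate(params))
    return s, metrics


# Toy algorithm and objective: the i-th set uses 16 * (i + 1) units,
# and the metric is simply the number of units.
suggestion, metrics = run_experiment(
    max_trial_count=10,
    batch_size=3,
    goal=64,
    propose=lambda i: {"units": 16 * (i + 1)},
    evaluate=lambda p: p["units"],
)
print(metrics)  # [16, 32, 48, 64, 80, 96]
```

In this run the goal is met by the fourth Trial, but the whole second batch still completes before the loop exits, loosely mirroring Trials that were already created in parallel.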
